Key Takeaways

LLMs (Large Language Models) are the intelligence engines behind generative AI, capable of understanding context, processing language, and generating human-like text.

LangChain is an AI development framework that connects LLMs with real enterprise data, tools, applications, and workflows.

LLMs alone cannot access databases, call APIs, or execute business logic. LangChain adds action, automation, and system integration.

Combining LLM + LangChain enables secure, scalable, and enterprise-ready AI applications such as automation agents, knowledge assistants, document intelligence, and more.

Businesses across industries - BFSI, healthcare, e-commerce, real estate, and education - gain higher productivity and lower operational costs through intelligent automation.

To build practical and compliant AI solutions, companies need expert development teams and a reliable partner, such as a top AI development company in the USA.

The future of AI will focus on autonomous decision-making, multimodal capabilities, and hybrid deployment models for enhanced security and performance.

Organizations that adopt LLM-powered automation early will drive innovation faster and gain competitive advantage in the digital transformation era.

What Is the Difference Between LLM and LangChain?

The core difference between LLM (Large Language Model) and LangChain in modern artificial intelligence development is straightforward:

LLM is the intelligence - the AI model that understands, processes, and generates human language using machine learning and advanced neural networks.

LangChain is the framework - it connects LLMs with business data, enterprise systems, APIs, and tools to take real actions in AI applications.

Think of it like this - in the world of AI software development:

| Example Analogy | LLM | LangChain |
|---|---|---|
| Human Body | Brain | Hands + Tools |
| Business Workflow | Intelligence | Action execution |
| What it provides | Text-based output | Functional AI solutions |

So while an LLM like GPT-4 can produce content using generative AI, on its own it cannot:

- Search through your company database

- Trigger software actions

- Call APIs or run business automations

LangChain fills this gap by adding critical intelligent automation features:

- Retrieval-Augmented Generation (RAG) using vector databases

- Memory management for long enterprise conversations

- Tool usage (APIs, business software, knowledge bases)

- Multi-step workflow automation for real processes

LLMs = language understanding + content generation

LangChain = the framework for building real enterprise AI applications powered by LLMs
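The Retrieval-Augmented Generation step listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not LangChain's API: the sample documents, the `retrieve` and `build_prompt` helpers, and the word-overlap scoring are all hypothetical stand-ins for a vector database and an embedding model.

```python
import re

# Hypothetical in-memory knowledge base; a real system would use a vector database.
DOCUMENTS = [
    "Refunds are processed within 5 business days.",
    "Premium support is available on weekdays from 9am to 6pm.",
    "Invoices are emailed on the first day of each month.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (stand-in for vector similarity)."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the text that would be sent to the LLM along with the retrieved context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


question = "When are refunds processed?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
```

The point of the design is that the model answers from retrieved company text rather than from its training data alone, which is why RAG is commonly used to reduce hallucination.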

What Is an LLM (Large Language Model)?

A Large Language Model is an advanced deep learning system and a core component of generative AI development and natural language processing (NLP).

LLMs learn patterns, grammar, reasoning, and business logic from massive datasets:

- Books, articles, websites

- Business documents

- Source code and automation scripts

- Conversation datasets

- Enterprise knowledge bases

What Can LLMs Do?

- Text generation (blogs, emails, chats)

- Code generation for software development

- Data analysis, predictive analytics, insight extraction

- Sentiment analysis and NLP classification

- Translation and summarization

- Smart decision-making support for enterprise AI

LLMs are used across industries in:

- AI chatbots & virtual assistants

- Marketing content automation

- Risk analysis & compliance in BFSI

- Healthcare reporting & diagnostics

- Education & learning systems

LLMs help companies adopt AI development services to build digital, intelligent experiences.

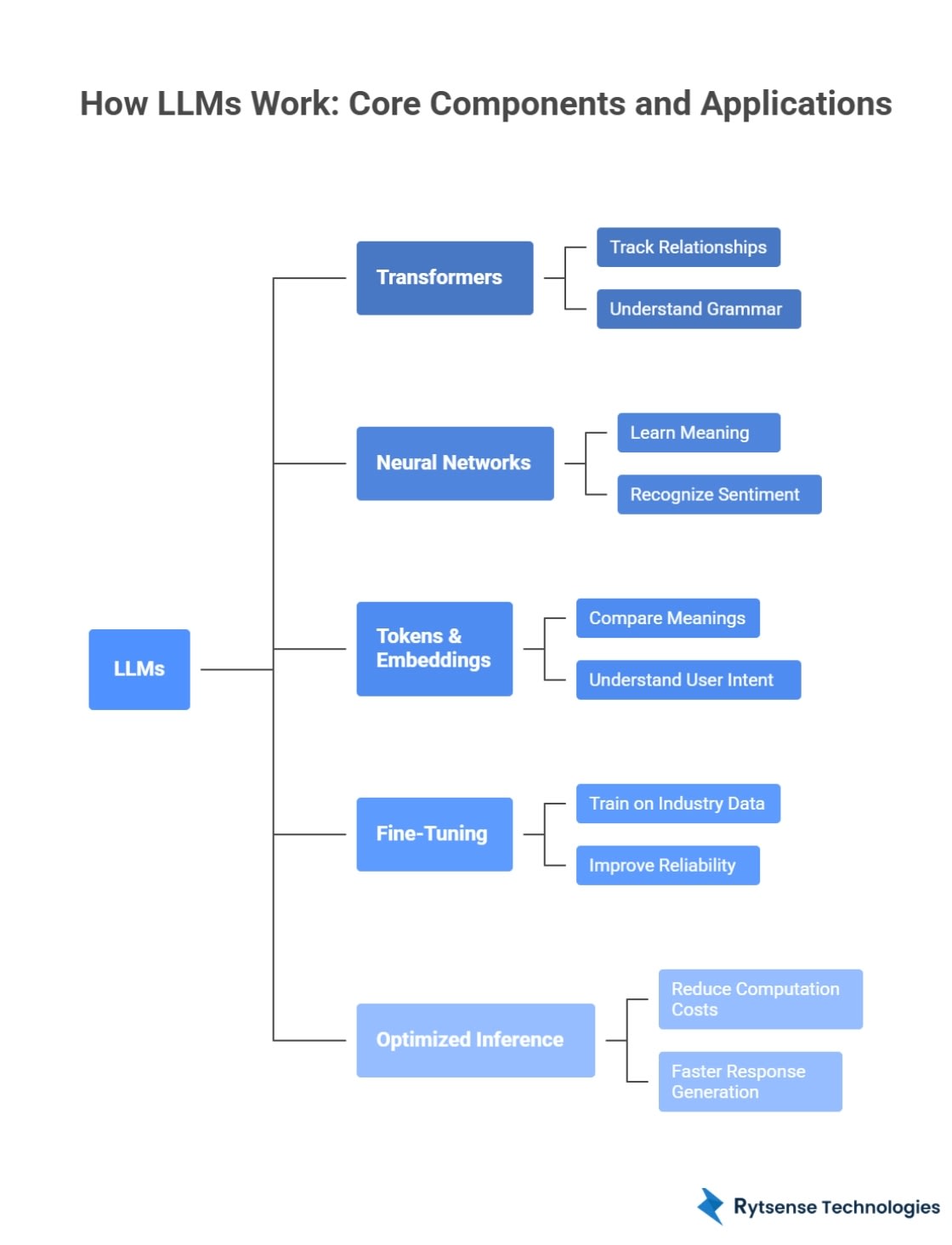

How Do LLMs Work?

LLMs work through a combination of advanced machine learning, neural networks, and massive amounts of training data. Their goal is to understand context, predict what comes next, and generate language that feels natural and meaningful - just like a human.

Below is a deeper look at the core components that power modern Large Language Models:

Transformers - The Engine Behind Language Understanding

Transformers are the primary AI architecture behind LLMs. They allow the model to:

- Track relationships between words in long sentences

- Understand grammar, tone, and intent

- Maintain context during long conversations

Transformers allow LLMs to keep track of previous messages and produce context-aware responses with high accuracy. This is why tools like GPT-4, Claude, and Gemini are excellent at chatting, understanding questions, and writing high-quality content.
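How a transformer tracks relationships between words can be illustrated with scaled dot-product attention, the core operation inside the architecture. The sketch below is deliberately simplified: the 2-dimensional vectors are made-up numbers, and real models learn these vectors and stack many attention heads and layers.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output dimension at a time.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


# Toy vectors for three tokens (illustrative values only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
output = attention([1.0, 0.0], keys, values)
```

Tokens whose keys align with the query receive larger weights, so their values contribute more to the output. That weighting is what lets the model relate distant words to each other.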

Neural Networks - Mimicking the Human Brain

LLMs use deep neural networks made of billions of parameters.

These networks:

- Learn meaning from patterns in data

- Recognize sentiment, intent, and emotions

- Improve accuracy as they are trained on more data

This layered structure supports human-like reasoning, which makes LLMs powerful tools for AI development services and business automation.

Tokens & Embeddings - Understanding Meaning at Scale

LLMs don't process entire sentences at once. They break text into smaller parts called tokens (words, subwords, or characters).

Then embeddings convert these tokens into mathematical vectors.

This lets AI models:

- Compare meanings (e.g., king vs queen)

- Understand user intent beyond literal words

- Perform semantic search and data retrieval

This is essential for NLP applications like chatbots and document analysis.
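Comparing meanings with embeddings usually comes down to measuring how closely two vectors point in the same direction. Here is a minimal sketch using cosine similarity. The 3-dimensional vectors are invented for illustration; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math

# Made-up embeddings for illustration; a real model would generate these.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


royal = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
fruit = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"])
```

With these toy values, `royal` is much closer to 1 than `fruit`. Semantic search relies on the same comparison to rank documents against a query.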

Fine-Tuning - Training for Business Needs

A generic LLM is smart - but not always specialized.

Fine-tuning allows organizations to:

- Train the model on industry data (Healthcare, Finance, Legal, Real Estate, etc.)

- Improve reliability and compliance

- Reduce errors and hallucination

- Follow brand tone and knowledge

This is how a custom AI development company can build solutions tailored to a business.
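Fine-tuning starts with a dataset of examples in the target domain, often stored one JSON object per line (JSON Lines). The sketch below shows how such a file might be prepared. The `prompt` and `response` field names and the sample records are assumptions; each training platform defines its own required schema.

```python
import json

# Hypothetical domain examples; field names are an assumption, not a fixed standard.
EXAMPLES = [
    {"prompt": "What does KYC stand for?", "response": "Know Your Customer."},
    {"prompt": "Define underwriting.", "response": "Assessing risk before issuing a policy or loan."},
    {"prompt": "   ", "response": "This record has no usable prompt."},
]


def validate(examples: list[dict]) -> list[dict]:
    """Keep only examples with a non-empty prompt and a non-empty response."""
    return [
        e for e in examples
        if e.get("prompt", "").strip() and e.get("response", "").strip()
    ]


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


jsonl = to_jsonl(validate(EXAMPLES))
```

Filtering out empty or malformed records before training is a small step, but low-quality examples are a common cause of unreliable fine-tuned models.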

Optimized Inference - Faster & Cost-Effective AI

Inference optimization ensures:

- Reduced computation costs

- Faster response generation

- Efficient deployment across cloud/on-prem systems

Techniques include:

- Quantization

- Model compression

- GPU & TPU acceleration

- Caching and distributed computing
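Of the techniques listed, quantization is the easiest to show in a few lines. The sketch below assumes the simplest possible scheme, symmetric 8-bit quantization with a single scale factor for all weights. Production toolchains use more refined variants (per-channel scales, calibration data), and the weight values here are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 1.27]  # illustrative values only
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.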

This enables enterprise AI deployment without sacrificing performance or security.

LLMs are also evolving into multimodal AI models capable of processing:

- Text

- Image

- Video

- Audio

This supports more advanced AI integration for business applications.

Top LLMs in 2025

| Model | Developer | Best Use Case |
|---|---|---|
| GPT-4 / o1 | OpenAI | Advanced reasoning in enterprise AI |
| Claude 3 Opus | Anthropic | Compliance-safe business insights |
| Gemini | Google | Multimodal AI application development |
| Llama 3 | Meta | Open-source custom AI development |
| Mixtral | Mistral AI | Cost-efficient AI models |

Businesses partner with the best AI development company in the USA to select the right model based on:

- Data security (on-prem vs cloud)

- Operational cost optimization

- Industry-specific customization

What Is LangChain?

LangChain is an AI development framework helping companies build LLM-powered applications that:

- Connect to real enterprise systems

- Automate workflows with AI agents

- Use business-specific data securely

- Create production-ready AI solutions

Key Features of LangChain

| Feature | Benefit |
|---|---|
| Prompt Engineering Support | Higher accuracy & consistency |
| Memory handling | Continuous context for LLM apps |
| Tool & Agent Support | Executes actions, not just output text |
| RAG | Real-time & accurate information |
| API + DB Integration | Full business automation |

LangChain is a preferred tool for AI development companies, startups, and large enterprises.
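The memory handling feature in the table can be pictured as a buffer that replays recent messages into each new prompt. The class below is a hypothetical plain-Python sketch of that idea, not LangChain's own memory classes. The `ConversationMemory` name and its methods are assumptions made for illustration.

```python
from collections import deque


class ConversationMemory:
    """Keeps the most recent messages so each prompt carries prior context."""

    def __init__(self, max_messages: int = 4) -> None:
        # A bounded deque drops the oldest message automatically when full.
        self.messages = deque(maxlen=max_messages)

    def add(self, role: str, text: str) -> None:
        self.messages.append((role, text))

    def as_prompt(self, new_question: str) -> str:
        """Prepend the stored history to the new user question."""
        history = "\n".join(f"{role}: {text}" for role, text in self.messages)
        question = f"user: {new_question}"
        return f"{history}\n{question}" if history else question


memory = ConversationMemory(max_messages=2)
memory.add("user", "Hi")
memory.add("assistant", "Hello, how can I help?")
memory.add("user", "What is RAG?")
prompt = memory.as_prompt("And how does LangChain use it?")
```

Capping the buffer keeps prompts within the model's context limit. Longer conversations typically need summarization or retrieval instead of a simple sliding window.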

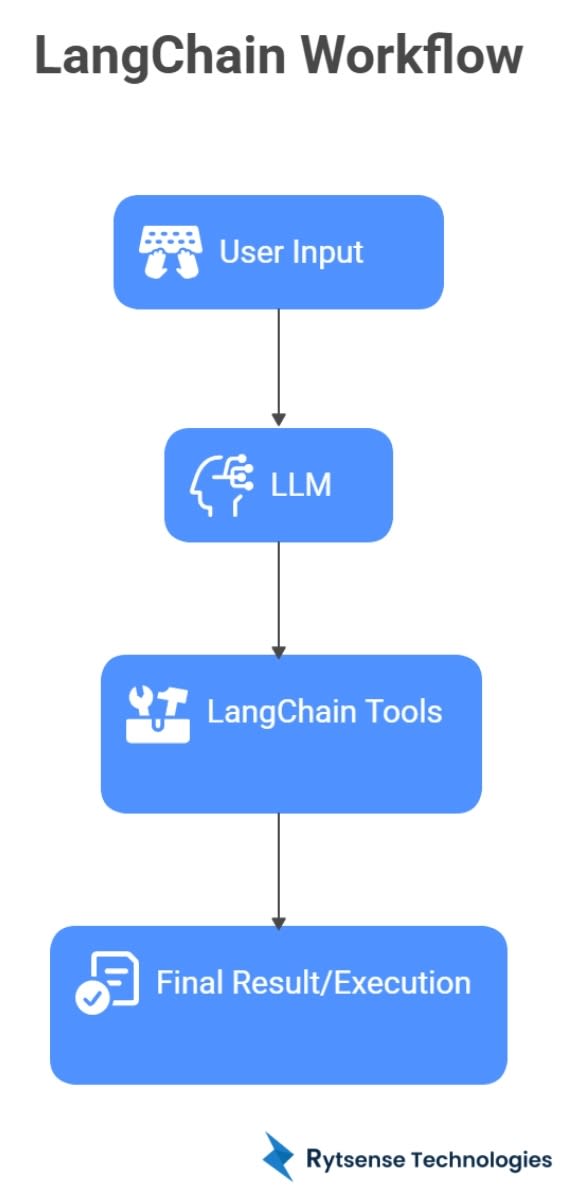

How LangChain Works in Technical Terms

Workflow of LLM + LangChain:

User Input → LLM → LangChain Tools → Final Result/Execution

LangChain helps LLMs:

- Query secure data sources (CRM, ERP, HRMS)

- Summarize massive enterprise documents

- Execute multi-step tasks operationally

Example for business automation:

"Show the top 20 customers this month and send a promotional email."

- LLM alone: Only generates response text

- LangChain: Pulls the actual customer data and sends the emails automatically
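The difference in the example above can be sketched as a small orchestration loop. Everything here is a hypothetical stand-in written in plain Python rather than LangChain's API: `plan` plays the role of the LLM deciding which tools to call, `CUSTOMERS` replaces a real CRM query, and `send_promo_email` only records messages in a list instead of sending them.

```python
# Hypothetical customer records; a real agent would query a CRM or database.
CUSTOMERS = [
    {"name": "Acme", "email": "ops@acme.example", "spend": 1200},
    {"name": "Globex", "email": "it@globex.example", "spend": 4500},
    {"name": "Initech", "email": "hq@initech.example", "spend": 800},
]
OUTBOX: list[tuple[str, str]] = []  # records emails instead of sending them


def top_customers(limit: int) -> list[dict]:
    """Tool 1: return the highest-spending customers."""
    return sorted(CUSTOMERS, key=lambda c: c["spend"], reverse=True)[:limit]


def send_promo_email(customers: list[dict]) -> int:
    """Tool 2: queue one promotional email per customer and return the count."""
    for customer in customers:
        OUTBOX.append((customer["email"], "This month's promotion"))
    return len(customers)


def plan(request: str) -> list[str]:
    """Stand-in for the LLM: map the request to an ordered list of tool names."""
    text = request.lower()
    steps = []
    if "top" in text and "customers" in text:
        steps.append("top_customers")
    if "email" in text:
        steps.append("send_promo_email")
    return steps


def run(request: str, limit: int = 2) -> dict:
    """Execute the planned tools in order, passing results from one to the next."""
    selected: list[dict] = []
    sent = 0
    for step in plan(request):
        if step == "top_customers":
            selected = top_customers(limit)
        elif step == "send_promo_email":
            sent = send_promo_email(selected)
    return {"selected": [c["name"] for c in selected], "emails_sent": sent}


summary = run("Show the top 2 customers this month and send a promotional email.")
```

An LLM on its own would stop at producing text for this request. The orchestration layer is what turns the plan into data lookups and actions.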

This is why companies hire expert custom AI solution developers.

LLM vs LangChain - Full Practical Comparison

| Category | LLM | LangChain |
|---|---|---|
| Core Function | Understanding & generation | Integration & execution |
| Real Data Usage | Limited | Complete access |
| Automation | Weak | Strong |
| AI App Development | Hard alone | Simplified |
| Business Role | Intelligence | Action + productivity |

Best Summary:

- LLM provides the brain

- LangChain converts that brain into a usable AI product

Business Use Cases - Where LLM + LangChain Work Together

They power enterprise-grade AI development services:

Healthcare AI

- Medical report summarization

- AI-driven clinical support

- Imaging & diagnostics analytics

Enterprise Automation

- AI agents managing workflows (CRM / ERP)

- Automated compliance reporting

- Instant knowledge-based Q&A

E-commerce

- Smart personalization engines

- Search powered by vector embeddings

- Virtual product recommendation assistants

Real Estate

- Buyer-property matching using AI

- Investment ROI prediction

- Automated lead qualification

Finance (BFSI)

- Intelligent fraud prevention

- Policy & underwriting automation

- Customer support AI chatbots

Key Benefits:

- Reduced operational cost

- Faster decision-making

- Higher productivity

- Better customer experience

Which Should Your Business Choose?

| Scenario | Best Choice |
|---|---|
| Only content chatbot needed | LLM |
| Business data interaction | LLM + LangChain |
| Secure enterprise automation | LLM + LangChain |
| Custom workflows + APIs | LLM + LangChain |

That's why organizations work with expert AI development companies experienced in:

- RAG implementation

- Generative AI integration

- AI app development

- Private LLM deployment

- On-prem + cloud AI architecture

Limitations of LLM vs LangChain

LLM Limitations

- Hallucination without real-time data

- Expensive for large-scale inference

- Privacy issues, unless handled securely

LangChain Limitations

- Requires skilled AI developers

- Complex architectures for large pipelines

- Needs strong cybersecurity

Both technologies need secure development practices for enterprise success.

Future of LLM + LangChain in the AI Industry

The shift is from:

Content generation → Autonomous AI Decision-Making

Future advancements include:

- AI agents performing multi-step tasks independently

- More compliance-ready enterprise AI systems

- Full multimodal business intelligence

- Hybrid private + cloud LLM ecosystems

This empowers:

- SaaS AI product startups

- Business digital transformation

- Government & public sector modernization

Enable AI Transformation for Your Business

If your organization wants real AI, not just chat, you need LLM + LangChain + secure AI integration, implemented by experienced AI developers.

Rytsense Technologies specializes in:

- Custom AI development

- Generative AI solutions

- NLP and machine learning integration

- Enterprise software automation

- AI-powered business workflow

Conclusion

Both LLMs and LangChain play crucial but very different roles in the evolution of modern artificial intelligence.

- LLMs provide the intelligence: understanding context, generating content, and supporting advanced reasoning.

- LangChain enables execution: connecting AI models to real-time business data, tools, and automation workflows.

To build enterprise-ready AI applications that deliver measurable value, organizations need the combined power of LLMs + LangChain. Together, they transform simple chatbots into smart, action-driven AI systems capable of improving productivity, reducing operational costs, and enabling informed decision-making.

As businesses continue accelerating digital transformation, the need for secure, scalable, and custom AI solutions will only grow. Partnering with the best AI development company in the USA ensures the right architecture, compliance, and deployment strategy from day one - whether you're a fast-scaling startup or a global enterprise.

The future belongs to companies that don't just use AI - but integrate it deeply into their operations.

Now is the time to invest in next-generation AI development services and unlock smarter, faster growth.

Meet the Author

Karthikeyan

Co-Founder, Rytsense Technologies

Karthik is the Co-Founder of Rytsense Technologies, where he leads cutting-edge projects at the intersection of Data Science and Generative AI. With nearly a decade of hands-on experience in data-driven innovation, he has helped businesses unlock value from complex data through advanced analytics, machine learning, and AI-powered solutions. Currently, his focus is on building next-generation Generative AI applications that are reshaping the way enterprises operate and scale. When not architecting AI systems, Karthik explores the evolving future of technology, where creativity meets intelligence.