What Is DevOps in AI? MLOps Guide for Scalable AI Development

Introduction

DevOps in AI, often called MLOps (Machine Learning Operations), is the practice of integrating software development and IT operations specifically for AI and machine learning workflows. It bridges the gap between data scientists, AI developers, and operations teams to ensure faster model deployment, continuous improvement, and reliable AI performance in real-world environments.

It enables businesses to build AI systems that learn, adapt, scale, and deliver value instead of remaining experimental prototypes.

1. What Is DevOps in AI?

While DevOps improves collaboration and automation for software development, AI DevOps (MLOps) is focused on the entire AI lifecycle — data collection, model training, versioning, deployment, monitoring, and retraining.

It supports:

- ✔ Data pipelines

- ✔ Machine learning model lifecycle

- ✔ Continuous integration & delivery (CI/CD) for AI

- ✔ Governance, compliance, and model performance tracking

👉 In simple terms: DevOps keeps software running reliably. MLOps keeps AI accurate and useful over time.

2. Why Do Businesses Need DevOps in AI?

AI adoption is growing rapidly across industries, but many organizations still struggle to move from proof of concept to production. Industry estimates frequently put the share of AI projects that never scale beyond the pilot stage at more than 80%, and a common reason is the lack of a structured operational strategy for running machine learning in the real world.

Here’s why DevOps in AI becomes essential:

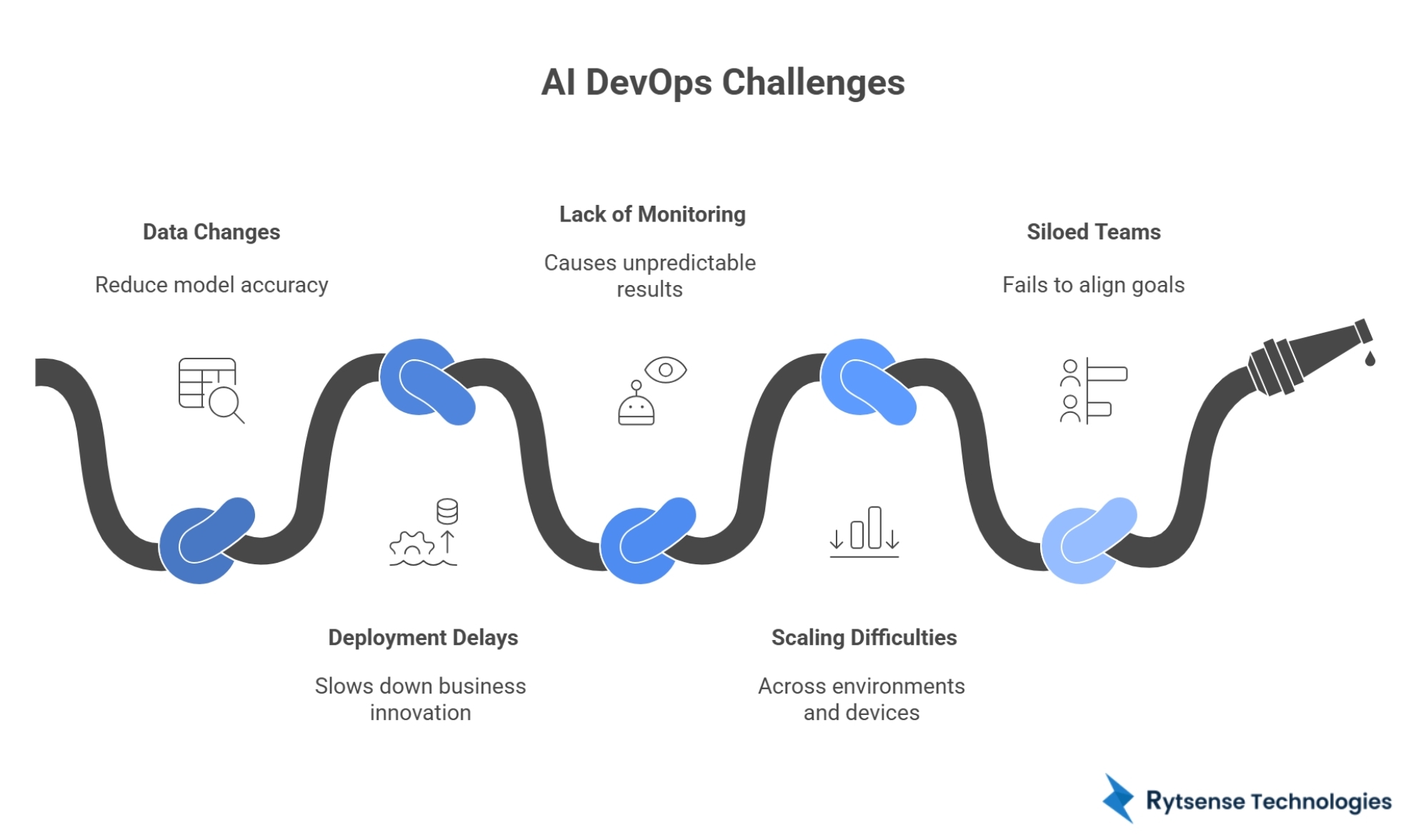

Common Challenges Without AI DevOps

🔹 Data changes reduce model accuracy: AI models degrade over time due to evolving customer behavior, market dynamics, and environmental conditions — a phenomenon called model drift. Without continuous monitoring and retraining, AI predictions quickly become inaccurate and misleading.

🔹 Deployment takes too long: Data scientists often build great models, but deployment gets delayed by manual workflows, dependency issues, and operational bottlenecks. This slows down business innovation and reduces competitive advantage.

🔹 Lack of monitoring causes unpredictable results: Unlike traditional software, AI can make wrong decisions even if the system is technically “operational.” Without proper observability and performance tracking, businesses risk compliance issues, reputational damage, and financial loss.

🔹 Models struggle to scale across environments: Running one model in a lab is easy — running hundreds across regions, devices, or cloud platforms is not. Scaling requires automation, containerization, and robust infrastructure management.

🔹 AI development is siloed between teams: Developers, data engineers, and data scientists often work separately. This lack of collaboration leads to communication gaps, slow release cycles, and systems that fail to align with business goals.

How DevOps in AI Solves These Challenges

- ✔ Accelerates AI application development: By introducing CI/CD for machine learning, teams can release new models faster with fewer failures and reduced rework.

- ✔ Protects AI performance from deterioration: MLOps continuously monitors models in production, detects drift early, and triggers automated retraining, keeping AI insights accurate and trustworthy.

- ✔ Ensures regulatory compliance and transparency: DevOps frameworks support versioning, auditing, data lineage tracking, and responsible AI governance, all of which are essential in regulated industries such as finance and healthcare.

- ✔ Supports long-term AI transformation: Instead of one-off experiments, organizations build scalable AI ecosystems that improve continuously and generate sustained value.

- ✔ Reduces operational costs and risks: Automation eliminates repetitive manual effort, optimizes cloud usage, and reduces the risk of system errors or security breaches.

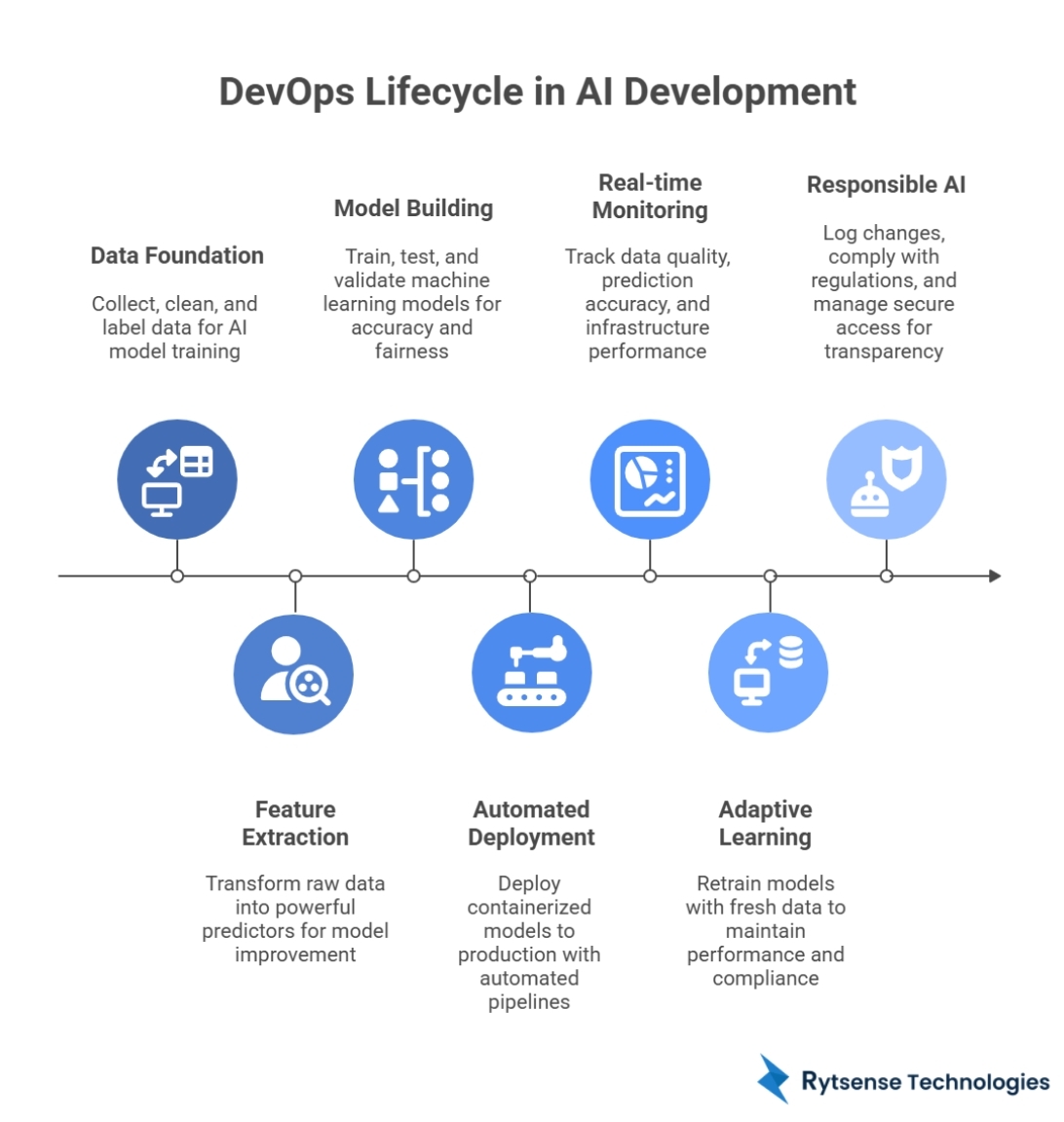

3. DevOps Lifecycle in AI Development

DevOps in AI introduces a continuous, cyclical approach to machine learning operations. Traditional software projects often treat deployment as the finish line, but AI systems must be monitored, retrained, and improved continuously to remain trustworthy and effective.

Below is a breakdown of each stage in the AI DevOps lifecycle:

Data Sourcing, Cleaning & Labeling

Every AI model relies on high-quality data. This stage includes:

- Collecting data from internal and external sources

- Removing noise, duplicates, and inaccuracies

- Labeling and categorizing data for supervised learning

- Establishing secure and scalable storage systems

➡️ Goal: Create a clean, reliable, and compliant data foundation.
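As a rough illustration of the cleaning step, the sketch below drops incomplete records, normalizes string fields, and removes duplicates. It assumes records arrive as Python dictionaries and that string fields can safely be compared case-insensitively; the function name `clean_records` and the example field names are hypothetical.

```python
from typing import Iterable


def clean_records(records: Iterable[dict], required: tuple[str, ...]) -> list[dict]:
    """Drop incomplete records, normalize strings, and remove duplicates.

    Assumes string values can be trimmed and lowercased without losing meaning.
    """
    seen: set[tuple] = set()
    cleaned: list[dict] = []
    for record in records:
        # Normalize string values: trim surrounding whitespace and lowercase.
        normalized = {
            key: value.strip().lower() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Treat None and empty strings as missing; skip incomplete records.
        if any(normalized.get(field) in (None, "") for field in required):
            continue
        # Deduplicate on the normalized required fields.
        key = tuple(normalized[field] for field in required)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


raw = [
    {"id": "A1", "text": " Great product ", "label": "pos"},
    {"id": "a1", "text": "great product", "label": "POS"},  # duplicate after normalization
    {"id": "A2", "text": "", "label": "neg"},  # missing text
    {"id": "A3", "text": "Late delivery", "label": None},  # missing label
]
print(clean_records(raw, required=("id", "text", "label")))
# [{'id': 'a1', 'text': 'great product', 'label': 'pos'}]
```

Which fields count as "required" and how aggressively to normalize are dataset-specific decisions; lowercasing IDs, for example, is only safe when IDs are case-insensitive.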

Feature Engineering & Exploratory Analysis

Before training, the right information must be extracted from raw data.

Key activities include:

- Identifying the most relevant features

- Handling missing values and anomalies

- Applying statistical methods to understand patterns

- Validating assumptions through exploratory data analysis (EDA)

➡️ Goal: Transform raw data into powerful predictors that improve model performance.
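To make "handling missing values" concrete, here is a minimal sketch of two common preprocessing steps for a single numeric column: median imputation followed by z-score standardization. It uses only Python's standard `statistics` module; the function name is hypothetical, and production pipelines would usually rely on a dataframe or ML library instead.

```python
import statistics
from typing import Optional


def impute_and_standardize(values: list[Optional[float]]) -> list[float]:
    """Fill missing entries with the column median, then rescale to mean 0, std 1."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("column has no observed values to impute from")
    # The median is less sensitive to outliers than the mean.
    median = statistics.median(observed)
    filled = [median if v is None else v for v in values]
    mean = statistics.fmean(filled)
    std = statistics.pstdev(filled)
    if std == 0:
        # A constant column carries no signal; return zeros rather than divide by zero.
        return [0.0] * len(filled)
    return [(v - mean) / std for v in filled]


print(impute_and_standardize([1.0, None, 3.0]))
```

In a real pipeline, the median, mean, and standard deviation would be computed on training data only and stored for reuse at inference time; otherwise information from evaluation data leaks into the features.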

AI Model Development & Testing

Data scientists experiment with multiple algorithms to find the best fit.

Processes involve:

- Training machine learning models

- Evaluating performance across metrics (accuracy, recall, F1-score)

- Running validation tests to prevent overfitting

- Reviewing fairness, bias, and ethical standards

➡️ Goal: Build a trustworthy model that aligns with business objectives.
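The evaluation metrics named above can be computed directly from confusion-matrix counts. The sketch below handles binary labels (0/1) and also computes precision, which F1 needs alongside recall; it is illustrative only, and libraries such as scikit-learn provide tested equivalents.

```python
def binary_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels where 1 is positive."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when a class never appears.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


print(binary_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
```

Here the model catches two of three positives and one of its three positive predictions is wrong, so accuracy, precision, recall, and F1 all come out to about 0.667.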

CI/CD Pipelines for Model Deployment

Once tested, the model is deployed into production environments.

Key practices:

- Containerization for consistency across platforms

- Automated deployment using CI/CD pipelines

- Shadow testing or A/B testing to minimize risk

- Secure API endpoints to integrate with applications

➡️ Goal: Deliver updates quickly and smoothly with minimal downtime.
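One common way to implement A/B testing is deterministic traffic splitting: hash a stable identifier so each user always sees the same model version. The sketch below assumes a string user ID and a configurable share of traffic for the candidate model; the function and label names are illustrative.

```python
import hashlib


def route_request(user_id: str, candidate_share: float) -> str:
    """Assign a user to 'candidate' or 'production' deterministically.

    Hashing keeps each user on the same variant across requests, which keeps
    A/B comparisons clean and makes the split reproducible.
    """
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0 and 1")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a bucket in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "candidate" if bucket < candidate_share else "production"


# Send roughly 10% of users to the new model.
assignments = [route_request(f"user-{i}", 0.10) for i in range(10_000)]
print(assignments.count("candidate"))
```

Because a user's bucket never changes, raising `candidate_share` gradually (for example 1%, then 10%, then 50%) only adds users to the candidate group, which turns the same function into a simple canary rollout.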

Continuous Monitoring for Accuracy & Drift

Production performance may degrade as data changes over time.

Monitoring focuses on:

- Tracking real-time data quality

- Measuring prediction accuracy and behavior shifts

- Observing infrastructure performance and latency

- Alerting when critical changes are detected

➡️ Goal: Detect issues early and maintain reliable AI decisions.
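Drift monitoring often compares the distribution of a feature in production against its training baseline. A widely used score is the Population Stability Index, `PSI = sum over bins of (actual_i - expected_i) * ln(actual_i / expected_i)`, where `expected_i` and `actual_i` are the shares of baseline and current values falling into bin `i`. The sketch below uses equal-width bins derived from the baseline; the bin count and the commonly quoted rule of thumb that a PSI above roughly 0.25 signals significant drift are conventions, not fixed standards.

```python
import math


def population_stability_index(
    baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI between a baseline sample and a current sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread; cannot build bins")
    width = (hi - lo) / bins

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins from the baseline range; out-of-range values
            # are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


baseline = [i / 100 for i in range(100)]
shifted = [0.9 + i / 1000 for i in range(100)]
print(population_stability_index(baseline, baseline))  # 0.0: identical distributions
print(population_stability_index(baseline, shifted))
```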

Automated Retraining With Updated Data

When drift or performance issues occur, automated retraining kicks in.

This includes:

- Re-evaluating new data patterns

- Updating the model using fresh training data

- Revalidating fairness and compliance

- Redeploying improved models into production

➡️ Goal: Keep AI systems current, adaptive, and efficient over time.
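The retraining loop described above can be sketched as a policy check followed by a guarded promotion: retrain only when drift or live accuracy crosses a threshold, and replace the current model only if the candidate scores better and passes fairness or compliance checks. The thresholds, the 0.25 drift cutoff, and the callables `train_fn`, `score_fn`, and `checks_fn` are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable, TypeVar

M = TypeVar("M")  # any model type


@dataclass
class RetrainPolicy:
    max_drift: float = 0.25  # e.g. a PSI threshold
    min_accuracy: float = 0.85  # accuracy measured on recently labeled traffic


def maybe_retrain(
    current: M,
    drift: float,
    live_accuracy: float,
    policy: RetrainPolicy,
    train_fn: Callable[[], M],
    score_fn: Callable[[M], float],
    checks_fn: Callable[[M], bool],
) -> tuple[M, str]:
    """Return (model_to_serve, decision)."""
    if drift <= policy.max_drift and live_accuracy >= policy.min_accuracy:
        return current, "no_action"
    # Retrain on fresh data (train_fn is responsible for loading it).
    candidate = train_fn()
    # Promote only if the candidate is better AND passes fairness/compliance checks.
    if score_fn(candidate) > score_fn(current) and checks_fn(candidate):
        return candidate, "promoted"
    return current, "kept_current"


# Stand-in "models" here are just their validation scores.
policy = RetrainPolicy()
print(maybe_retrain(0.80, 0.40, 0.90, policy, lambda: 0.88, lambda m: m, lambda m: True))
# (0.88, 'promoted')
```

Keeping the current model when the candidate fails validation matters: retraining on drifted data does not guarantee a better model, so redeployment stays gated on evidence.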

Governance, Audits & Version Control

To ensure trust and accountability, every AI action must be traceable.

Practices involve:

- Logging changes in data, models, and code

- Complying with legal and industry-specific regulations

- Managing secure access and permissions

- Maintaining historical versions for rollback

➡️ Goal: Enable responsible AI at scale with complete transparency.
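One way to make a change log tamper-evident is hash chaining: each entry stores the hash of the previous one, so editing any past record breaks verification. This is a minimal in-memory sketch; a real system would persist entries to durable, access-controlled storage, and the field names are hypothetical.

```python
import hashlib
import json
import time
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log of changes to data, models, and code."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, artifact: str, details: Optional[dict] = None) -> dict:
        body = {
            "actor": actor,
            "action": action,
            "artifact": artifact,
            "details": details or {},
            "timestamp": time.time(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; return False if any entry was altered or reordered."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("alice", "train", "churn-model:v3", {"data_hash": "a1b2c3"})
log.record("bob", "deploy", "churn-model:v3")
print(log.verify())  # True
log.entries[0]["actor"] = "mallory"  # tamper with history
print(log.verify())  # False
```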

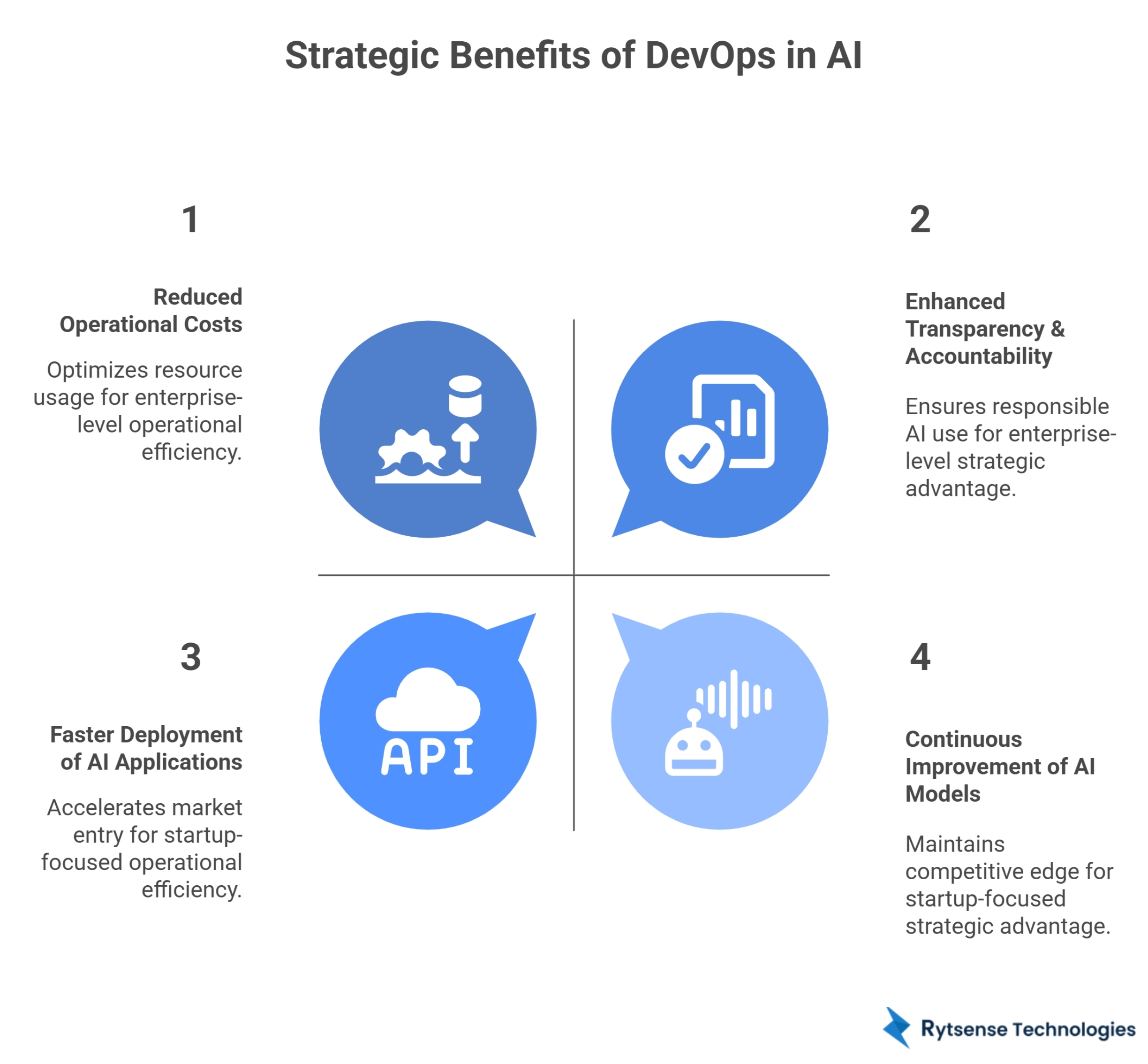

4. Benefits of DevOps in AI for Startups & Enterprises

Adopting DevOps in AI is not just a technical enhancement; it is a strategic investment that drives faster innovation, cost savings, and long-term success. By turning machine learning into a repeatable and manageable process, organizations unlock the full potential of artificial intelligence.

Below are the key benefits explained in detail:

1. Faster Deployment of AI Applications

Without structured operations, AI projects can take months to launch because of disjointed workflows.

With AI DevOps:

- CI/CD pipelines automate integration and deployment

- Release cycles become shorter with fewer bottlenecks

- Teams can deploy new models or updates rapidly

➡️ Businesses get to market faster, turning ideas into real value quickly.

2. Continuous Improvement of AI Models

AI performance naturally declines as real-world data changes.

MLOps ensures:

- Automated monitoring of prediction quality

- Scheduled or trigger-based retraining processes

- Continuous feedback loop between production and development

➡️ AI systems stay accurate, fresh, and competitive over time.

3. Reduced Operational & Infrastructure Costs

Automation and governance prevent unnecessary resource usage.

DevOps in AI reduces:

- Manual workloads for data scientists and engineers

- Expensive rework due to failed deployments

- Overprovisioning of compute resources

➡️ Companies scale AI operations efficiently with optimized cloud spending.

4. Higher Accuracy & Consistency in AI Decisions

Manual testing alone cannot keep up with AI behavior that shifts as data changes.

MLOps ensures:

- Real-time evaluation of accuracy, bias, and model drift

- Quick remediation when predictions go off track

- Standardized best practices embedded into pipelines

➡️ AI becomes more reliable and trustworthy for mission-critical workflows.

5. Improved Collaboration Across Teams

AI success depends on cross-functional alignment.

MLOps:

- Unifies developers, data scientists, and operations teams

- Creates shared visibility into pipelines and model performance

- Eliminates silos that slow down AI delivery

➡️ Teams work smarter together, not separately.

6. Enhanced Transparency & Accountability

As AI becomes integral to business decision-making, accountability matters.

DevOps in AI enables:

- Complete tracking of model versions and outcomes

- Better explainability for stakeholders and authorities

- Stronger governance for ethical and compliant AI use

➡️ Organizations build AI systems that are responsible, auditable, and secure.

5. What It Means for Different Business Types

For Startups: Speed & Disruption

- Accelerates innovation and market entry

- Enables rapid experimentation and pivoting

- Reduces operational burden with automation

➡️ Helps startups innovate fearlessly while conserving resources.

For Enterprises: Scale & Stability

- Ensures reliable performance across global deployments

- Protects brand trust through transparent operations

- Integrates AI into complex business ecosystems

➡️ Helps enterprises scale AI confidently and sustainably.

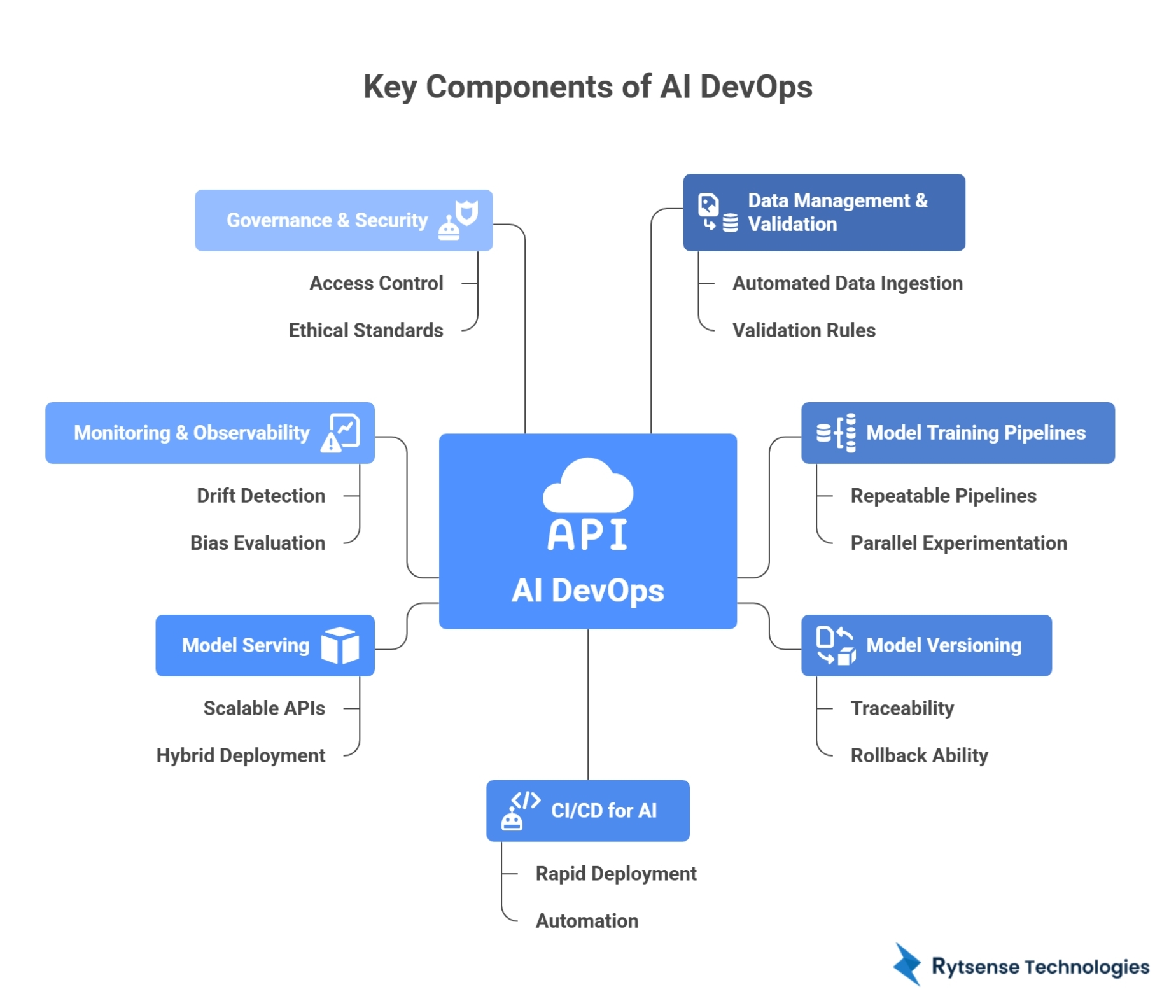

6. Key Components of AI DevOps

To successfully operationalize artificial intelligence, organizations must streamline every part of the AI lifecycle — from data ingestion to model deployment and ongoing optimization. DevOps in AI introduces a standardized, automated, and governed approach to ensure AI systems continue performing effectively at scale.

Below are the essential pillars that make AI DevOps work:

Data Management & Validation

Data is the foundation of any machine learning model. However, real-world data is often messy, incomplete, or inconsistent.

DevOps practices ensure:

- Automated data ingestion and preprocessing

- Validation rules to filter bad or biased data

- Feature tracking to maintain consistency across pipelines

➡️ Result: AI models learn from accurate, fresh, and trusted data, leading to better predictions.
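Validation rules are often expressed declaratively as accepted types and allowed ranges per field. The sketch below applies such rules and separates valid rows from rejected ones, with reasons. The transaction fields and limits are hypothetical, and the sketch covers only malformed data; detecting biased data requires separate statistical checks.

```python
from typing import Any, Callable

# Hypothetical rules: field -> (accepted types, predicate on the value)
RULES: dict[str, tuple[tuple[type, ...], Callable[[Any], bool]]] = {
    "amount": ((int, float), lambda v: 0 <= v <= 1_000_000),
    "country": ((str,), lambda v: len(v) == 2),  # two-letter country code
    "age": ((int,), lambda v: 18 <= v <= 120),
}


def validate_row(row: dict, rules=RULES) -> list[str]:
    """Return a list of rule violations (empty when the row is valid)."""
    errors = []
    for field, (types, check) in rules.items():
        value = row.get(field)
        if value is None:
            errors.append(f"{field}: missing")
        elif not isinstance(value, types) or isinstance(value, bool):
            # bool is a subclass of int in Python, so reject it explicitly.
            errors.append(f"{field}: unexpected type {type(value).__name__}")
        elif not check(value):
            errors.append(f"{field}: out of range")
    return errors


def split_valid(rows: list[dict], rules=RULES) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Partition rows into valid ones and (row, errors) pairs for rejected ones."""
    valid, rejected = [], []
    for row in rows:
        errors = validate_row(row, rules)
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected


rows = [
    {"amount": 120.5, "country": "DE", "age": 34},
    {"amount": -5, "country": "US", "age": 40},
    {"amount": 10.0, "country": "USA", "age": None},
]
valid, rejected = split_valid(rows)
print(len(valid), [errs for _, errs in rejected])
# 1 [['amount: out of range'], ['country: out of range', 'age: missing']]
```

Keeping the rejected rows with their reasons, instead of silently dropping them, gives data engineers something concrete to investigate when a source starts sending bad data.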

Model Training Pipelines

Training machine learning models traditionally requires manual steps, slowing innovation.

AI DevOps enables:

- Repeatable and automated training pipelines

- Parallel experimentation at scale

- Hyperparameter tuning using automation

➡️ Result: Faster experimentation and improved model performance with reduced manual effort.
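Automated hyperparameter tuning comes down to training one model per candidate setting and keeping the one that scores best on held-out validation data. The sketch below runs a grid search over the regularization strength `alpha` of a one-feature ridge regression, chosen only because it has a closed-form fit; real pipelines would tune richer models with tools such as scikit-learn's `GridSearchCV`.

```python
def fit_ridge_1d(xs: list[float], ys: list[float], alpha: float) -> tuple[float, float]:
    """Closed-form ridge regression for one feature; the intercept is not penalized."""
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + alpha)  # alpha shrinks the slope toward zero
    return slope, y_mean - slope * x_mean


def mse(params: tuple[float, float], xs: list[float], ys: list[float]) -> float:
    slope, intercept = params
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train, val, alphas):
    """Fit once per alpha on train, score on val, return the best alpha and all scores."""
    scores = {alpha: mse(fit_ridge_1d(*train, alpha), *val) for alpha in alphas}
    best = min(scores, key=scores.get)
    return best, scores


train = ([0.0, 1.0, 2.0, 3.0, 4.0], [1.0, 3.0, 5.0, 7.0, 9.0])  # y = 2x + 1
val = ([5.0, 6.0], [11.0, 13.0])
print(grid_search(train, val, alphas=[0.0, 1.0, 10.0]))
```

On this noise-free data the unregularized fit (`alpha=0.0`) wins; with noisy data a moderate `alpha` often generalizes better, which is exactly what the validation split is there to detect.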

Model Versioning

Machine learning artifacts evolve with data — meaning one mistake can impact outcomes.

Version control for data, models, and features ensures:

- Full traceability and auditability

- Ability to roll back to earlier versions

- Comparison of different model experiments

➡️ Result: Teams confidently deploy the best and safest model every time.
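The versioning capabilities above can be sketched as a small in-memory registry that records each version's training-data fingerprint and metrics, plus a history of production promotions, so rollback is just stepping back one entry. Tools such as MLflow offer a full model registry; the class, field names, and artifact paths here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    artifact_uri: str  # where the serialized model lives (hypothetical path scheme)
    data_hash: str  # fingerprint of the training data, for lineage
    metrics: dict


class ModelRegistry:
    def __init__(self) -> None:
        self._versions: dict[int, ModelVersion] = {}
        self._history: list[int] = []  # production versions in promotion order

    def register(self, artifact_uri: str, data_hash: str, metrics: dict) -> ModelVersion:
        mv = ModelVersion(len(self._versions) + 1, artifact_uri, data_hash, dict(metrics))
        self._versions[mv.version] = mv
        return mv

    def promote(self, version: int) -> None:
        if version not in self._versions:
            raise KeyError(f"unknown version {version}")
        self._history.append(version)

    @property
    def production(self) -> Optional[ModelVersion]:
        return self._versions[self._history[-1]] if self._history else None

    def rollback(self) -> ModelVersion:
        """Revert production to the previously promoted version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self._history.pop()
        return self._versions[self._history[-1]]

    def compare(self, a: int, b: int, metric: str) -> float:
        """Positive when version a beats version b on a higher-is-better metric."""
        return self._versions[a].metrics[metric] - self._versions[b].metrics[metric]


registry = ModelRegistry()
registry.register("models/churn/1", "d41d8c", {"f1": 0.81})
registry.register("models/churn/2", "9e107d", {"f1": 0.84})
registry.promote(1)
registry.promote(2)
print(registry.production.version)  # 2
print(registry.rollback().version)  # 1
```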

CI/CD for AI Systems

Continuous Integration / Continuous Delivery (CI/CD) is extended to ML models, and MLOps often adds a third practice, Continuous Training (CT), which automatically retrains models as new data arrives.

This ensures:

- Rapid deployment of new model updates

- Automation from testing to production rollout

- Reduced risk of deployment failures

➡️ Result: AI applications reach users faster and more reliably.
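In practice, a CI pipeline for models usually includes an automated quality gate: the build fails if a candidate model regresses against the current production baseline. The sketch below assumes higher-is-better metrics, a tolerated regression of 0.01, and hypothetical metric names; a CI step would run it and exit with a non-zero status whenever the returned list is non-empty.

```python
from typing import Optional


def quality_gate(
    candidate: dict[str, float],
    baseline: dict[str, float],
    max_regression: float = 0.01,
    floors: Optional[dict[str, float]] = None,
) -> list[str]:
    """Return human-readable failures; an empty list means the candidate may ship."""
    failures = []
    for name, base_value in baseline.items():
        value = candidate.get(name)
        if value is None:
            failures.append(f"{name}: missing from candidate metrics")
        elif value < base_value - max_regression:
            failures.append(f"{name}: {value:.3f} regressed from baseline {base_value:.3f}")
    # Absolute minimums, e.g. a recall floor required by the business.
    for name, floor in (floors or {}).items():
        value = candidate.get(name)
        if value is None or value < floor:
            failures.append(f"{name}: below required minimum {floor:.3f}")
    return failures


print(quality_gate({"f1": 0.85, "recall": 0.78}, {"f1": 0.84, "recall": 0.80}))
# ['recall: 0.780 regressed from baseline 0.800']
```

Checking every metric, not just the headline one, catches the common case where overall F1 improves while recall on the positive class quietly drops.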

Model Serving

Models must be easily accessed and integrated into business applications — whether on cloud, edge devices, or internal systems.

AI DevOps supports:

- Scalable APIs for real-time decision making

- Batch and streaming inference

- Deployment across hybrid environments

➡️ Result: AI becomes usable across business workflows — not stuck in the lab.
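Real-time and batch serving can share one model interface. The sketch below assumes a model is a callable that maps a list of feature rows to a list of predictions. It shows a framework-agnostic JSON request handler that a web framework could call, and a batch function that scores rows in fixed-size chunks. All names are hypothetical.

```python
import json
from typing import Callable, Iterable, Iterator, Sequence

# Assumed interface: the model scores a batch of feature rows at once.
BatchModel = Callable[[list[Sequence[float]]], list[float]]


def handle_predict(body: str, model: BatchModel, n_features: int) -> tuple[int, str]:
    """Validate a JSON request like {"features": [...]} and return (HTTP status, JSON body)."""
    try:
        features = json.loads(body)["features"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return 400, json.dumps({"error": "expected a JSON object with a 'features' list"})
    if (
        not isinstance(features, list)
        or len(features) != n_features
        or not all(isinstance(x, (int, float)) for x in features)
    ):
        return 400, json.dumps({"error": f"expected {n_features} numeric features"})
    # A single request is just a batch of one.
    return 200, json.dumps({"prediction": model([features])[0]})


def batch_predict(
    model: BatchModel, rows: Iterable[Sequence[float]], batch_size: int = 256
) -> Iterator[float]:
    """Score a (possibly very large) stream of rows in fixed-size chunks."""
    batch: list[Sequence[float]] = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield from model(batch)
            batch = []
    if batch:  # score the final partial chunk
        yield from model(batch)


toy_model: BatchModel = lambda rows: [sum(r) for r in rows]  # stand-in for a real model
print(handle_predict('{"features": [1, 2, 3]}', toy_model, n_features=3))
# (200, '{"prediction": 6}')
print(list(batch_predict(toy_model, [[1], [2], [3]], batch_size=2)))
# [1, 2, 3]
```

Because `batch_predict` is a generator, it can stream through datasets larger than memory, and the chunk size lets a vectorized model amortize per-call overhead.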

Monitoring & Observability

Once deployed, model accuracy tends to drop over time as user behavior and market conditions change.

Monitoring includes:

- Model drift detection

- Data quality monitoring

- Fairness and bias evaluation

- Performance and latency checks

➡️ Result: Early detection of issues ensures trustworthy AI at all times.
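Accuracy and latency checks are often computed over a rolling window of recent requests. The sketch below keeps the last N observations, computes accuracy and a nearest-rank 95th-percentile latency, and returns alerts when thresholds are crossed. It assumes ground-truth labels eventually arrive for served predictions (often with a delay); the window size and thresholds are placeholders.

```python
from collections import deque


class RollingMonitor:
    def __init__(self, window: int = 500, min_accuracy: float = 0.9, max_p95_ms: float = 200.0) -> None:
        # deque(maxlen=...) silently drops the oldest observation once full.
        self.outcomes: deque = deque(maxlen=window)
        self.latencies: deque = deque(maxlen=window)
        self.min_accuracy = min_accuracy
        self.max_p95_ms = max_p95_ms

    def observe(self, correct: bool, latency_ms: float) -> None:
        self.outcomes.append(1 if correct else 0)
        self.latencies.append(latency_ms)

    def p95_latency(self) -> float:
        ordered = sorted(self.latencies)
        # Nearest-rank percentile: ceil(0.95 * n), computed with integers to avoid float rounding.
        rank = max(1, (95 * len(ordered) + 99) // 100)
        return ordered[rank - 1]

    def alerts(self) -> list[str]:
        if not self.outcomes:
            return []
        found = []
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.min_accuracy:
            found.append(f"accuracy {accuracy:.3f} below {self.min_accuracy}")
        p95 = self.p95_latency()
        if p95 > self.max_p95_ms:
            found.append(f"p95 latency {p95:.1f} ms above {self.max_p95_ms} ms")
        return found


monitor = RollingMonitor(window=100, min_accuracy=0.9, max_p95_ms=90.0)
for i in range(1, 101):
    monitor.observe(correct=(i % 5 != 0), latency_ms=float(i))  # every 5th prediction wrong
print(monitor.alerts())
# ['accuracy 0.800 below 0.9', 'p95 latency 95.0 ms above 90.0 ms']
```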

Governance & Security

With rising regulations, businesses must ensure AI remains compliant and ethical.

Governance measures include:

- Access control and model audit trails

- Responsible AI standards and explainability

- Data privacy protection and encryption

- Policy enforcement for sensitive environments

➡️ Result: Organizations deploy AI with confidence, transparency, and strong alignment to ethical standards.
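Access control and policy enforcement can be illustrated with role-based permissions plus a separation-of-duties rule: whoever deploys a model needs deploy rights, and at least one other person with approval rights must sign off. The roles, actions, and rule below are hypothetical examples, not a prescribed policy.

```python
from enum import Enum


class Action(Enum):
    VIEW = "view"
    TRAIN = "train"
    APPROVE = "approve"
    DEPLOY = "deploy"


# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: dict[str, set[Action]] = {
    "data_scientist": {Action.VIEW, Action.TRAIN},
    "ml_engineer": {Action.VIEW, Action.TRAIN, Action.DEPLOY},
    "risk_officer": {Action.VIEW, Action.APPROVE},
}


def is_allowed(roles: set[str], action: Action) -> bool:
    """True if any of the user's roles grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def can_deploy(deployer: str, user_roles: dict[str, set[str]], approvers: list[str]) -> bool:
    """Require deploy rights plus sign-off from a different user with approve rights."""
    if not is_allowed(user_roles.get(deployer, set()), Action.DEPLOY):
        return False
    return any(
        approver != deployer and is_allowed(user_roles.get(approver, set()), Action.APPROVE)
        for approver in approvers
    )


users = {"ana": {"ml_engineer"}, "raj": {"risk_officer"}, "lee": {"data_scientist"}}
print(can_deploy("ana", users, approvers=["raj"]))  # True
print(can_deploy("lee", users, approvers=["raj"]))  # False: no deploy rights
print(can_deploy("ana", users, approvers=["lee"]))  # False: lee cannot approve
```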

Component Summary Table

| Component | What It Solves |
|---|---|
| Data Management & Validation | Ensures quality data for model training |
| Model Training Pipelines | Automates training workflows |
| Model Versioning | Tracks model evolution and rollback |
| CI/CD for AI | Enables fast deployment and updates |
| Model Serving | Improves delivery to applications |
| Monitoring & Observability | Detects model drift and errors |
| Governance & Security | Ensures compliance and responsible AI use |

This structured approach transforms AI from experiments into enterprise-grade AI systems.

7. Challenges in AI DevOps & Solutions

Although AI has the power to transform industries, the journey from experimentation to enterprise-wide adoption is filled with challenges. DevOps in AI helps organizations overcome these barriers by introducing automation, governance, and cross-team collaboration throughout the machine learning lifecycle.

Let’s break down the major challenges and how MLOps solves them:

Challenge 1: Unstable Model Accuracy

As customer behavior, market data, and environments shift over time, model accuracy declines; this is known as model drift.

Impact if unchecked: Inaccurate predictions → Wrong business decisions → Loss of trust

✔ AI DevOps Solution:

- Real-time model performance monitoring

- Automated alerts when drift is detected

- Continuous retraining with new data

- Side-by-side comparison of model versions

➡️ AI stays relevant, reliable, and aligned with current business needs.

Challenge 2: Difficult Collaboration Between Teams

Data scientists, developers, and IT operations teams often work separately, using different tools and processes.

Impact: Slower releases, miscommunication, increased failure rates

✔ AI DevOps Solution:

- Unified pipelines and shared workspaces

- Standardized workflows across disciplines

- Clear ownership from data to deployment

➡️ Stronger teamwork leads to faster delivery and higher quality outcomes.

Challenge 3: High Cost of Scaling AI

AI workloads demand GPUs, distributed systems, and continuous compute — which can be expensive if unmanaged.

Impact: Budget overruns and wasted infrastructure resources

✔ AI DevOps Solution:

- Cloud-native architectures for scalability on demand

- Containerization and orchestration (e.g., Docker, Kubernetes) for resource efficiency

- Cost observability and auto-scaling policies

➡️ Enterprises scale intelligently while optimizing operational cost.
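Auto-scaling policies typically adjust replica counts in proportion to load. The sketch below follows the core proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current_replicas * current_metric / target_metric)`, with a tolerance band to avoid flapping and min/max bounds to cap cost. It omits the real controller's stabilization windows and readiness handling, and the choice of metric (for example GPU utilization per replica) is an assumption.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: don't scale, to avoid oscillation.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # The max bound caps spending; the min bound keeps the service available.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6: load is 1.5x target
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4: within tolerance
print(desired_replicas(10, current_metric=300, target_metric=60))  # 20: capped at max
```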

Challenge 4: Model Compliance & Security Risks

AI must comply with evolving regulations related to data privacy, fairness, explainability, and risk management.

Impact: Legal penalties, reputational damage, loss of customer trust

✔ AI DevOps Solution:

- Built-in audit, data lineage tracking, and access control

- Explainability frameworks to justify model decisions

- Automated policy enforcement and governance dashboards

➡️ AI becomes secure, ethical, and compliant by design.

Challenge 5: Deployment Delays & Operational Bottlenecks

Manual deployment of AI models is slow, error-prone, and difficult to maintain.

Impact: Models get outdated before reaching production

✔ AI DevOps Solution:

- CI/CD pipelines designed specifically for ML workloads

- Safe deployment strategies (shadow mode, A/B testing)

- Automated rollback and version management

➡️ AI deployments become predictable, scalable, and fast.
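Shadow mode means the candidate model sees real production inputs, but its outputs are only logged and never returned to users. The minimal sketch below runs the shadow model inline for clarity (production systems usually call it asynchronously so it cannot add latency), assumes numeric predictions, and records disagreements beyond a hypothetical tolerance for later review.

```python
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def serve_with_shadow(
    features: Sequence[float],
    production: Model,
    shadow: Model,
    log: list[dict],
    tolerance: float = 0.05,
) -> float:
    """Always return the production prediction; only log what the shadow model does."""
    result = production(features)
    try:
        shadow_result = shadow(features)
    except Exception as exc:  # a failing candidate must never break user traffic
        log.append({"features": list(features), "shadow_error": repr(exc)})
        return result
    if abs(shadow_result - result) > tolerance:
        log.append({"features": list(features), "production": result, "shadow": shadow_result})
    return result


def broken(_: Sequence[float]) -> float:
    raise RuntimeError("candidate crashed")


disagreements: list[dict] = []
print(serve_with_shadow([1.0, 2.0], sum, lambda f: sum(f) + 0.1, disagreements))  # 3.0
print(serve_with_shadow([1.0, 2.0], sum, broken, disagreements))  # 3.0
print(len(disagreements))  # 2
```

Reviewing the disagreement log before any A/B test gives an early, zero-risk signal of how the candidate would behave on real traffic.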

8. DevOps in AI vs Traditional DevOps

Traditional DevOps practices focus mainly on software delivery efficiency — ensuring applications are deployed quickly, run smoothly, and stay resilient. However, AI introduces new variables, most importantly data, which changes constantly and directly affects system behavior.

Below is a deeper comparison to highlight why AI systems need their own operational model:

| Feature | Traditional DevOps | AI DevOps (MLOps) |
|---|---|---|
| Artifacts | Code | Code + Data + Models |
| Testing | Fixed test cases | Continuous testing due to changing data |
| Deployment | Predictable | Dynamic and real-time changes |
| Team Collaboration | Developers + Ops | Developers + Data Scientists + Data Engineers + Ops |
| Performance | Software speed & stability | Model accuracy, fairness & trust |

AI is never “done.” Its performance depends on how closely real-world data keeps matching the data the model was trained on, and on how regularly the model is retrained.

9. Real-World Use Cases of DevOps in AI

🏦 Banks: Fraud detection models automatically retrained based on new threats

🛍️ Retail: AI recommendation engines updated with current trends

🚚 Logistics: Predictive analytics optimized for real-time supply chain data

⚕️ Healthcare: Accuracy tracking for diagnostic AI systems

🤖 Manufacturing: Quality defect detection models improved continuously

If AI is the brain, DevOps ensures the brain keeps learning effectively.

10. Future Trends: AI + DevOps + Automation

Emerging trends transforming AI DevOps:

- Self-healing AI systems

- AutoML with zero-code pipelines

- AIOps: AI for IT operations automation

- Predictive governance for responsible AI

- AI deployment on edge & IoT devices

- LLMOps for generative AI applications

AI DevOps is evolving toward an increasingly automated, self-managing AI lifecycle.

11. How to Get Started With DevOps in AI

Whether you're a startup or an enterprise, begin with these steps:

- Define business outcomes for AI development

- Build scalable data pipelines

- Introduce CI/CD for AI workflows

- Choose the right DevOps tools & infrastructure

- Implement continuous monitoring & retraining

- Partner with an expert AI development company

A strong foundation determines how far your AI can scale.

12. DevOps Tools for AI Development

Modern MLOps teams rely on a stack of powerful tools to manage their workflows. Popular tools include:

- CI/CD & Automation: GitHub Actions, Jenkins, Azure DevOps

- Model Management: MLflow, Weights & Biases, Vertex AI

- Orchestration: Kubeflow, Airflow, Prefect

- Serving: TensorFlow Serving, TorchServe, Seldon Core

- Monitoring: Evidently AI

A reliable AI development services partner can guide tool selection and implementation.

13. Final Thoughts

DevOps in AI is not just a technology practice — it’s a business survival strategy. To fully unlock the value of artificial intelligence, companies must:

- Deliver AI faster

- Operate AI more reliably

- Continuously maintain accuracy and trust

Businesses that adopt MLOps today will be better positioned to lead their markets tomorrow. If you're planning to integrate AI with strong governance, automation, and scalability, you're already ahead.

Meet the Author

Karthikeyan

Co-Founder, Rytsense Technologies

Karthik is the Co-Founder of Rytsense Technologies, where he leads cutting-edge projects at the intersection of Data Science and Generative AI. With nearly a decade of hands-on experience in data-driven innovation, he has helped businesses unlock value from complex data through advanced analytics, machine learning, and AI-powered solutions. Currently, his focus is on building next-generation Generative AI applications that are reshaping the way enterprises operate and scale. When not architecting AI systems, Karthik explores the evolving future of technology, where creativity meets intelligence.