Key Takeaways

- Fairness measures help prevent AI systems from making biased decisions based on gender, age, race, or other personal characteristics.

- These measures also build user trust and help companies reach larger markets.

- Fairness should be considered throughout the entire AI development process, from data collection to ongoing monitoring after deployment.

- Implementing fairness measures helps companies avoid legal fines, lawsuits, and reputational damage.

- Fairness in AI isn't optional; it's essential, because it protects people from harm while creating better business outcomes for everyone.

Eliminate bias, boost trust—create AI that works for everyone.

What Purpose Do Fairness Measures in AI Product Development Serve?

AI is now prevalent across many sectors, from medicine, where AI programs assist doctors, to the mobile applications people use every day. As AI's impact grows, so does the importance of one question: what purpose do fairness measures serve in AI product development? It is one of the most pressing discussions in technology today.

Fairness measures matter in AI product development for several reasons, all of which come down to making AI systems work for every user.

Preventing discrimination

The most important objective is to prevent AI systems from making discriminatory decisions, for example on the basis of ethnicity, race, gender, or age. Without fairness measures, AI systems learn from biased historical data and perpetuate the same discrimination.

Building trust

Users are more willing to trust, and therefore use, AI systems that are designed to treat everyone fairly. For example, a shopping site that gives good, useful recommendations to all of its customers will earn more trust.

Legal compliance

Many countries have laws that prohibit discrimination. Businesses have to ensure their AI systems do not violate these laws in order to avoid fines and lawsuits.

Protecting reputation and reducing business risk

AI systems that are not designed to be fair can expose a company to legal action and tarnish its reputation, both of which hurt the business. Fair AI helps companies avoid these reputational and legal risks.

Fairness measures enable AI to work well for all types of people, not just certain groups, which makes it more useful and effective.

These measures are applied at every stage, during data collection, model training, testing, and live operation, so that unfair behavior is caught and mitigated as early as possible.

Everyone involved in developing and operating an AI system needs to understand the aim of fairness policies in product development. Such policies seek to ensure that the AI system treats people equitably and does not make biased decisions based on ethnicity, gender, age, or other social attributes. The rest of this article explains the topic in plain, accessible language.

AI fairness in a nutshell

Before elaborating on the purpose of fairness measures in AI product development, we should first explain AI fairness. AI fairness is concerned with disparities in the opportunities and outcomes that different social groups receive as a result of AI systems operating in society.

Fairness is the principle that all human beings have equal moral standing and must be protected from discriminatory harm. For AI, that means designing, developing, deploying, and using AI systems in ways that do not cause discriminatory harm. For AI product development, the question is how to create AI that treats every person fairly right from the start.

What is the purpose of AI product development?

Fairness measures protect people from discrimination, provide equal opportunities, build trust, and improve AI systems overall. Harnessing the power of AI requires implementing them with care.

Our commitment to ethics and fairness will determine our future with AI. Understanding and implementing fairness measures helps build AI tools that not only work well but also reflect society's values of justice and equality. Every organization working on AI technologies has an ethical obligation to ask what purpose fairness measures serve in AI product development and to put them into action.

AI fairness is not a "nice to have." It is a necessity. Begin implementing fairness measures in AI today, and help build a future where AI benefits everyone equally.

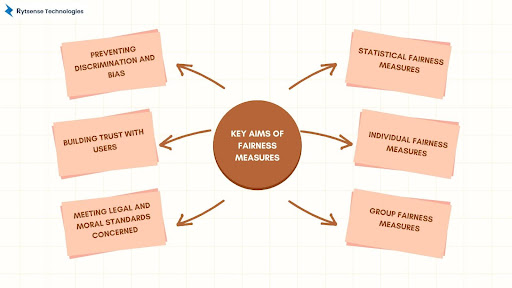

The Key Aims of Fairness Measures

To grasp the purpose of fairness measures in AI product development, it helps to consider the areas where these measures actually make a difference.

Preventing Discrimination and Bias

The most important purpose fairness measures serve in AI product development is preventing discrimination. AI learns from the data it is given, and if that data has inequalities entrenched in it, the AI is likely to perpetuate them.

Take, for example, historical gender bias in employment. An AI trained on data from a period when most roles were male-dominated is likely to “favor” men for those roles, perpetuating the bias. Scholars and practitioners have suggested different strategies to combat bias in AI, including changes to data collection, model selection, and how data-driven decisions are made.

Fairness measures help developers identify these issues during the design phase and make the necessary adjustments before the system goes live and starts automating decisions that affect real people.

Building Trust with Users

Another critical purpose fairness measures serve in AI product development is building trust. People are more comfortable engaging with an AI system when they are confident it has been designed to treat them fairly and equally.

Imagine an online shopping site that makes recommendations. If customers realize that the platform suggests overpriced goods to wealthy customers and inexpensive goods to everyone else, they may lose trust in the system. If it offers valuable recommendations and assistance to all customers, trust will increase.

Meeting Legal and Ethical Standards

Fairness in AI product development is also a legal obligation. Many nations have laws that prohibit companies from discriminating against people because of their race, ethnicity, sex, age, or other protected characteristics. These laws also apply to decisions made by AI systems.

Complying with ethical norms helps manage risks such as mishandled sensitive data, biased algorithms, and privacy breaches.

Companies that do not incorporate fairness principles in AI development are likely to face legal action, fines, and reputational harm.

In practice, developers pursue these objectives with several types of fairness measures that apply to AI systems.

Statistical Fairness Measures

Statistical fairness measures analyze an algorithm's outputs for bias with respect to predefined attributes such as age or gender. One toolkit built for this purpose is AI Fairness 360, which provides machine learning fairness metrics together with bias mitigation algorithms. It can detect and mitigate bias at both the dataset and the model level, and it includes around 70 fairness metrics and 11 bias mitigation algorithms.

Statistical fairness measures can answer questions such as:

- Is the rate at which loan applications are accepted or rejected among different racial groups the same?

- Are the job recommendations for male and female users of the service of equal quality?

- Is the quality of medical diagnosis systems the same for different age groups?
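
The first question above, about loan acceptance rates, can be checked with a few lines of code. The following is a minimal sketch rather than a production tool: the function names, the group labels "A" and "B", and the decision data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1 = approved) for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions, groups):
    """Largest gap in approval rate between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates)
print(gap)
```

A large gap does not prove discrimination on its own, but it tells the team where to look more closely.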

Individual Fairness Measures

Individual fairness measures focus on how the system treats each individual person. In contrast to group fairness, which compares outcomes across groups of people, individual fairness requires that people who are closely matched on the relevant attributes receive similar outcomes from the system, regardless of the group they belong to.
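
One simple way to probe individual fairness is to look for pairs of people who are nearly identical on the relevant attributes but receive very different scores. The sketch below assumes the features are already normalized to a comparable scale; the function name, the thresholds, and the applicant data are hypothetical.

```python
import math
from itertools import combinations


def inconsistent_pairs(features, scores, max_distance=0.1, max_score_gap=0.05):
    """Find pairs that are close in relevant features but scored very differently."""
    flagged = []
    for i, j in combinations(range(len(features)), 2):
        distance = math.dist(features[i], features[j])  # Euclidean distance
        score_gap = abs(scores[i] - scores[j])
        if distance <= max_distance and score_gap > max_score_gap:
            flagged.append((i, j))
    return flagged


# Hypothetical applicants: (normalized income, normalized credit history length).
features = [(0.80, 0.70), (0.82, 0.71), (0.20, 0.30)]
scores = [0.90, 0.55, 0.30]

flagged = inconsistent_pairs(features, scores)
print(flagged)
```

Here applicants 0 and 1 are almost identical yet scored far apart, so that pair is flagged for review. Choosing which attributes count as "relevant" and how to measure distance is a judgment call that this sketch does not settle.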

Group Fairness Measures

Group fairness measures compare the system's outcomes across different groups of people. They are meant to ensure that no group is unfairly and consistently disadvantaged by the AI system.

How Fairness Measures Work in Practice

Looking at how fairness measures are applied in practice shows how they support each stage of AI product development.

During Data Collection

Collecting data in a way that minimizes bias is essential to fairness in AI systems. A data collection policy can set fairness requirements, such as inclusion and demographic coverage, to prevent data bias. Developers must gather data from a diverse population to improve fairness.

Including data from all relevant demographics is very important. If developers pull data from a single demographic, the resulting AI will work well only for that kind of user.
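
A demographic coverage requirement like the one described above can be turned into an automated check. The sketch below compares each group's share of a dataset with a reference share. The attribute name `age_band`, the reference shares, and the tolerance are hypothetical; real reference values would come from the population the product is meant to serve.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Report groups whose share of the data differs from the reference share."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            # Positive = over-represented, negative = under-represented.
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical dataset of 10 records, heavily skewed toward younger users.
records = (
    [{"age_band": "18-39"}] * 7
    + [{"age_band": "40-64"}] * 2
    + [{"age_band": "65+"}] * 1
)
reference_shares = {"18-39": 0.4, "40-64": 0.4, "65+": 0.2}

gaps = representation_gaps(records, "age_band", reference_shares)
print(gaps)
```

In this example every age band is outside the tolerance, which would signal that more data is needed from the two older groups before training.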

During AI Training

Throughout model building, fairness metrics should be evaluated alongside the model's performance. Assessing them during training and evaluation helps detect bias early and refine the model.

Developers check that the model meets its fairness targets. When problems are detected, they can adjust the training data, the model, or its settings to improve fairness.
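
One example of such an adjustment is reweighing, a preprocessing technique that gives each training example a weight so that group membership and outcome label look statistically independent in the weighted data. The sketch below is an illustration of that idea, not the method of any particular team; the group labels and hiring outcomes are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical historical hiring data: 1 = hired, 0 = not hired.
groups = ["M", "M", "M", "M", "F", "F", "F", "F"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for group, label, weight in zip(groups, labels, weights):
    print(group, label, round(weight, 3))
```

Under-represented combinations, such as hired women in this data, receive weights above 1, and over-represented ones receive weights below 1. The weights would then be passed to a training routine that supports per-example weights.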

During Validation and Testing

AI systems are tested on examples that represent all types of users to confirm that the fairness metrics are met. Systematic failures and fairness issues are recorded, and they must be resolved before the AI can work well for all users.
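
A common way to surface systematic failures during testing is to break accuracy down by user group instead of reporting a single overall number. The following sketch assumes binary labels; the group names, the test data, and the 0.1 gap threshold are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return the share of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


def lagging_groups(accuracies, max_gap=0.1):
    """List groups whose accuracy trails the best group by more than max_gap."""
    best = max(accuracies.values())
    return [group for group, acc in accuracies.items() if best - acc > max_gap]


# Hypothetical diagnostic test set: 1 = condition present, 0 = absent.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["under_60"] * 4 + ["60_plus"] * 4

accuracies = accuracy_by_group(y_true, y_pred, groups)
lagging = lagging_groups(accuracies)
print(accuracies)
print(lagging)
```

Overall accuracy here is 75%, which hides the fact that the model is right every time for one group and only half the time for the other.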

After Deployment

Developers keep monitoring fairness metrics after an AI system is put into use. This lets them detect and resolve new issues that arise over time.
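
Post-deployment monitoring can be as simple as recomputing a fairness metric on each new batch of decisions and flagging batches that cross a threshold. This sketch assumes decisions are logged together with a group label; the weekly batch names, the data, and the 0.2 threshold are hypothetical.

```python
def approval_gap(decisions, groups):
    """Gap between the highest and lowest approval rate across groups."""
    totals, approved = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + decision
    rates = [approved[group] / totals[group] for group in totals]
    return max(rates) - min(rates)


def batches_needing_review(batches, threshold=0.2):
    """Return the names of batches whose approval gap exceeds the threshold."""
    return [
        name
        for name, (decisions, groups) in batches.items()
        if approval_gap(decisions, groups) > threshold
    ]


# Hypothetical weekly logs: (decisions, group of each decision).
batches = {
    "week_1": ([1, 0, 1, 0], ["A", "A", "B", "B"]),
    "week_2": ([1, 1, 1, 0], ["A", "A", "B", "B"]),
    "week_3": ([1, 1, 0, 0], ["A", "A", "B", "B"]),
}

flagged = batches_needing_review(batches)
print(flagged)
```

In this example the gap widens from week to week, the kind of drift that a one-time pre-launch test would never catch.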

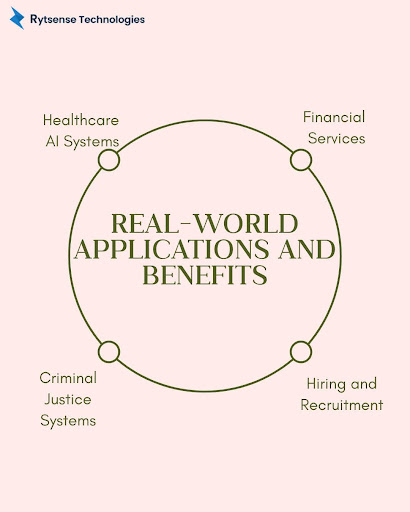

Real-World Applications and Benefits

Examining real-world scenarios sheds light on the importance of fairness measures in the development of an AI product.

Healthcare AI Systems

AI systems in the healthcare sector assist physicians in diagnosing and treating diseases. Fairness measures help ensure that these systems work well for all populations. Without them, an AI system might diagnose diseases far better in men than in women, or perform better for younger patients than for older ones.

Financial Services

Banks and other financial institutions use AI to evaluate an applicant’s eligibility for a loan or credit card. Fairness measures help ensure that race and gender biases do not influence credit decisions.

Hiring and Recruitment

Many companies use AI to help decide whom to hire. Fairness measures help ensure that all qualified candidates, regardless of background, have an equal chance of being selected.

Criminal Justice Systems

Some courts now use AI to assist with bail and sentencing decisions. These decisions demand especially rigorous fairness safeguards because they can profoundly affect people's lives.

Challenges in Implementing Fairness Measures

Fairness measures are important in AI product development, but they are not easy to implement, for several reasons.

Technical Problems

Creating a fair AI system is not easy: implementing effective fairness measures requires specialized skills and tools. There are many approaches to fairness in AI algorithms, and choosing the right one can be very difficult.

Different Types of Fairness Measures

Different fairness measures can conflict with each other. An AI system can be fair by one measure and unfair by another. Developers have to find the right balance among these competing definitions of fairness.
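
A small worked example makes the conflict concrete. In the hypothetical data below, both groups are selected at the same rate, so demographic parity holds, yet qualified members of one group are selected less often, so equal opportunity is violated. The function names and the data are illustrative assumptions.

```python
def selection_rate(y_pred):
    """Share of people who received the positive outcome."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true, y_pred):
    """Share of truly qualified people (label 1) who received the positive outcome."""
    outcomes_for_qualified = [pred for true, pred in zip(y_true, y_pred) if true == 1]
    return sum(outcomes_for_qualified) / len(outcomes_for_qualified)


# Hypothetical data: y_true 1 = qualified, y_pred 1 = selected.
group_a = {"y_true": [1, 1, 0, 0], "y_pred": [1, 1, 0, 0]}
group_b = {"y_true": [1, 1, 1, 0], "y_pred": [1, 1, 0, 0]}

parity_gap = abs(selection_rate(group_a["y_pred"]) - selection_rate(group_b["y_pred"]))
opportunity_gap = abs(true_positive_rate(**group_a) - true_positive_rate(**group_b))

print(f"demographic parity gap: {parity_gap:.2f}")
print(f"equal opportunity gap: {opportunity_gap:.2f}")
```

Because the groups have different shares of qualified people, equalizing selection rates leaves one qualified person in group B unselected. Which gap matters more depends on the use case, and that is the balancing decision developers have to make.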

Data Limitations

A lack of diverse data sets can make it hard for developers to build a truly fair AI system and can lead to an unfair one. This is a particular problem in specialized fields.

Defining Fairness

What counts as fair can differ from one person or culture to another. Something that seems fair to one group might not seem fair to another.

Understanding fairness measures in AI product development is also important from a business perspective.

Risk Management

Unfair AI systems put businesses at risk of legal action, regulatory fines, and reputational loss. Organizations may be legally required to keep their AI products free of discrimination, and they bear a social responsibility for any technology bias that affects marginalized groups.

Market Expansion

Fair AI lets a business serve a wider and more varied population, which is likely to increase revenue and open up new growth opportunities.

Employee Satisfaction

Organizations with fairer AI systems tend to have more satisfied employees, who can take pride in working for an ethical business.

Innovation and Quality

Successful AI systems are usually well integrated. Developing fair AI systems also tends to improve system quality and lead to more innovative AI.

Your AI should be powerful—and fair to every user.

Best Measures to Consider When Implementing Fairness

Here are some recommendations for companies that understand the purpose of fairness measures in AI product development and want to implement them:

Start at the Very Beginning

Fairness should be considered at the very start of any AI project. Retrofitting fairness into an existing system is more difficult and more expensive than building it in from the start.

Bring in Diverse Teams

Diverse teams help businesses spot fairness concerns that more homogeneous teams would overlook.

Ongoing Monitoring

Regular fairness checks are essential. AI systems should be monitored continuously to ensure they remain fair.

Stakeholder Engagement

Involving members of the affected communities ensures that fairness measures address relevant concerns and needs.

Continuous Improvement

Putting processes in place to keep refining fairness measures, and to review their outcomes, builds trust over time.

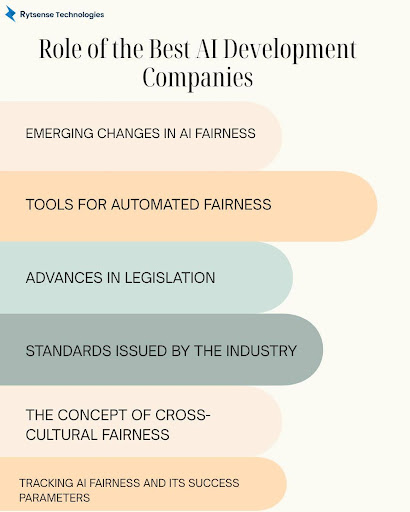

The Role of the Best AI Development Companies

The best AI development companies in the USA are keenly aware of fairness measures, which makes them strong partners to consider. Leading companies in this field are experts in building fair and ethical AI systems.

A top AI development company in the USA will have worked with multiple fairness frameworks and will have the technical know-how to put them into practice. Such companies will also comply with the legal and regulatory frameworks that govern specific industries and use cases.

Emerging Changes in AI Fairness

The AI fairness landscape is constantly changing. New research continues to explore fairness measures in product development and better ways to implement them.

Tools for Automated Fairness

New tools that can automatically identify and correct fairness issues in AI systems are being developed. They will help developers confirm that fairness has been built into their AI systems.

Advances in Legislation

Countries around the world are working on new policies and laws that pertain to AI fairness. These policies are likely to make fairness measures mandatory for a variety of AI systems.

Industry Standards

Industry associations are working to formulate a common set of AI fairness practices that companies across industries can follow uniformly.

The Concept of Cross-Cultural Fairness

Researchers are studying how fairness is understood in different parts of the world and looking for ways to build AI systems that are acceptable across societies.

Tracking AI Fairness and Its Success Parameters

It is equally important to know how to track whether fairness measures in AI product development are succeeding. Businesses need clear metrics to evaluate their fairness efforts.

Quantitative Metrics

These use statistics to measure whether the AI system treats user groups differently. Examples include demographic parity, equal opportunity, and equalized odds.
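
Equal opportunity and equalized odds can both be computed from per-group error rates. The sketch below assumes binary labels and that every group contains both positive and negative examples. It uses one common convention, taking the equalized odds difference as the larger of the true positive rate gap and the false positive rate gap; the function names and data are hypothetical.

```python
def rates_for_group(y_true, y_pred):
    """Return (true positive rate, false positive rate) for one group."""
    true_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    false_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    positives = sum(1 for t in y_true if t == 1)
    negatives = len(y_true) - positives
    return true_positives / positives, false_positives / negatives


def fairness_gaps(data_by_group):
    """Compute equal opportunity and equalized odds differences across groups."""
    tprs, fprs = {}, {}
    for group, (y_true, y_pred) in data_by_group.items():
        tprs[group], fprs[group] = rates_for_group(y_true, y_pred)
    tpr_gap = max(tprs.values()) - min(tprs.values())
    fpr_gap = max(fprs.values()) - min(fprs.values())
    return {
        "equal_opportunity_difference": tpr_gap,
        "equalized_odds_difference": max(tpr_gap, fpr_gap),
    }


# Hypothetical evaluation data per group: (true labels, predictions).
data_by_group = {
    "group_a": ([1, 1, 0, 0], [1, 0, 0, 0]),
    "group_b": ([1, 1, 0, 0], [1, 1, 1, 1]),
}

gaps = fairness_gaps(data_by_group)
print(gaps)
```

A value of 0.0 for either metric would mean the groups are treated identically by that measure; the larger the value, the bigger the disparity.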

Qualitative Assessments

These involve gathering feedback from users and affected community groups about the AI system’s impact on them.

Compliance Audits

Scheduled audits confirm that AI systems still meet the fairness thresholds set by law and by other rules governing equity.

Impact Studies

Longitudinal studies of different communities reveal the real impact AI systems have on them over time.

Why Choose Rytsense Technologies for Fair AI Development

When implementing fairness measures in AI product development, partnerships and collaboration are vital. Rytsense Technologies stands out for companies looking to design and build fair and ethical AI systems.

Rytsense Technologies understands fairness measures in AI product development and how to apply them to your specific needs. Our experienced AI developers and data scientists create AI systems that are both effective and ethically sound.

Here's why you should choose Rytsense Technologies for your AI development needs:

- Comprehensive Fairness Expertise: We help you implement the right measures for your use case. Our approach is industry-focused so that your fairness measures are purposeful and effective.

- Proven Track Record: We help build fair and ethical AI systems in different sectors to improve user trust and regulatory compliance. We have extensive experience in supporting AI systems in healthcare, finance, hiring, and customer service.

- End-to-End Support: We provide full support for AI fairness measures, starting from the first consultation. Rytsense Technologies tailors its approach to each project.

- Regulatory Compliance: We help your AI systems remain compliant with applicable legal and regulatory requirements for fairness and non-discrimination.

- Training and Education: We train your internal teams in fairness concepts and practices to ensure the long-term success and sustainability of your initiatives.

Protect your AI systems from bias and unfairness with Rytsense Technologies. Contact us today to learn how best to implement fairness measures in AI product development and make your systems effective and trustworthy. Our team is ready to help you understand the purpose of fairness measures in AI product development and implement them successfully in your projects.

Conclusion

Understanding what fairness measures accomplish in AI product development is not merely an academic concern. For any organization building or using AI systems, it is a practical necessity. These measures prevent discrimination, enhance user trust, support legal compliance, manage business risk, and ensure that AI systems work effectively for everyone.

Fairness is a goal that must be pursued actively and intentionally, while bias is an error, usually unintentional, in the system. Organizations that prioritize fairness in technology development will not only thrive but also put themselves ahead of the competition.

Turn fairness into your AI’s competitive advantage.

The Author

Karthikeyan

Co Founder, Rytsense Technologies